dkonigs


> It appears to me that you are determining the initial exposure (time) based on measurements of one or more points on the image. Here's an idea: Have the user specify both grade and exposure-time. Then, knowing the paper profile for that grade, you can simply plot the resulting tones on the horizontal scale and let the user slide them left or right using the encoder knob, changing exposure-time until the tones are what he wants. Or did I misunderstand something?

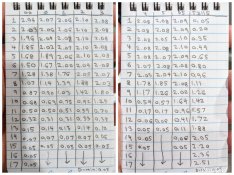

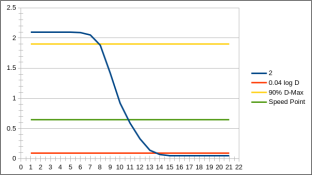

So I spent some time yesterday taking a closer look at exactly how my RH Analyser does this, and experimenting with many of these ideas in a standalone scratch program. The way the RH device works, it takes the lowest measurement and picks a time that places it at the D=0.04 point on the scale. It then simply displays where all the other measurements fall relative to it on the tone graph. So there's really no averaging or middle-grey selection going on at all. I'm most likely going to go with this approach for starters, because it is simpler. I'm also thinking of dropping the second of my three ideas from above, as I'm not sure it's useful or accurate.
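The RH Analyser-style placement described above reduces to a little arithmetic. What follows is a minimal sketch, not the RH device's or this project's actual code. It assumes meter readings are linear and proportional to baseboard illuminance, and that the paper profile supplies a hypothetical `highlight_exposure` (reading × seconds needed to reach D=0.04). The lowest reading then fixes the base time, and every other reading is expressed as stops of extra exposure relative to it.

```python
import math
from dataclasses import dataclass


@dataclass
class PaperProfile:
    # Hypothetical field: exposure (meter reading x seconds) this paper/grade
    # needs to reach the D=0.04 highlight point. Not taken from any real device.
    highlight_exposure: float


def base_time_for_highlight(readings, profile):
    """Pick a base time that puts the lowest reading at D=0.04.

    Returns (base_time_seconds, offsets_in_stops), where each offset is how many
    stops more exposure that reading gets than the lowest (highlight) reading.
    """
    if not readings or min(readings) <= 0:
        raise ValueError("need at least one positive reading")
    lowest = min(readings)
    # Lowest reading = least light through the negative = lightest print tone.
    base_time = profile.highlight_exposure / lowest
    offsets = [math.log2(r / lowest) for r in readings]
    return base_time, offsets
```

For example, readings of 8, 2 and 16 with `highlight_exposure=20` give a 10-second base time and offsets of 2, 0 and 3 stops.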

The whole process looks something like this:

- Take measurements of the image

- Pick a "base time" that correctly places the lightest tone

- Show a "tone graph" that places tones for all the measurements relative to that base tone

- This tone graph is based on the paper profile for the currently selected contrast grade

- If the profile just has "base time + ISO(R)", then this graph is based on the contrast range

- If the profile has all the numbers, interpolate the full characteristic curve and base the graph on the density range

- If the user adjusts the exposure time, the measurements are re-plotted on the tone graph accordingly.

- If the user changes the contrast grade or paper profile, the tone graph is recomputed and the base time is adjusted
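The two ways of building the tone graph in the list above can be sketched as follows. Offsets are in stops above the lowest (highlight) reading. The function names and the `curve` format are hypothetical, and linear interpolation between curve points is a simplifying assumption; the device may well fit the characteristic curve differently.

```python
import math
from bisect import bisect_left

LOG10_2 = math.log10(2.0)  # one stop expressed in log10 exposure (~0.301)


def tone_position_from_iso_r(offset_stops, iso_r):
    """Fraction along the tone graph when the profile only has ISO(R).

    ISO(R) is 100 x the paper's log-exposure range, so an offset sits at
    (offset in log E) / (R / 100). 0.0 is the highlight end, 1.0 the shadow
    end; values outside 0..1 fall off the scale.
    """
    return (offset_stops * LOG10_2) / (iso_r / 100.0)


def density_from_curve(offset_stops, curve):
    """Linearly interpolate print density from a characteristic curve.

    `curve` is a hypothetical list of (relative log exposure, density) points,
    sorted by log exposure, with 0.0 at the D=0.04 highlight point.
    Offsets beyond either end are clamped to the end densities.
    """
    log_e = offset_stops * LOG10_2
    xs = [x for x, _ in curve]
    if log_e <= xs[0]:
        return curve[0][1]
    if log_e >= xs[-1]:
        return curve[-1][1]
    i = bisect_left(xs, log_e)  # first point at or above log_e
    (x0, d0), (x1, d1) = curve[i - 1], curve[i]
    return d0 + (d1 - d0) * (log_e - x0) / (x1 - x0)
```

Under this model, changing the exposure time by some number of stops just adds that number to every offset before re-plotting, and switching grade or paper swaps in a different `iso_r` or `curve`.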

So yes, the user can change the contrast grade quite easily. I'm not picking it automatically or forcing it.

Also, the time is fully adjustable. I have the concept of a "base time" and a "time adjustment" internally, where metering just picks the "base time". So if you take readings, get a base time, "add 3/4 stop", and then do something that picks a new base time... you'll get that new base +3/4 stop.
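The base-time/adjustment split can be sketched as a small piece of state. `ExposureState` and its methods are hypothetical names. The only rules assumed are that one stop doubles the time, and that re-metering replaces the base time while leaving the user's adjustment alone.

```python
from dataclasses import dataclass


@dataclass
class ExposureState:
    """Hypothetical model of the base-time/time-adjustment split."""
    base_time: float = 10.0        # seconds, chosen by metering
    adjustment_stops: float = 0.0  # user's offset, kept across re-metering

    def exposure_time(self) -> float:
        # Each stop doubles (or, if negative, halves) the time.
        return self.base_time * 2.0 ** self.adjustment_stops

    def adjust(self, stops: float) -> None:
        self.adjustment_stops += stops

    def remeter(self, new_base_time: float) -> None:
        # Metering only replaces the base time; the adjustment stays.
        self.base_time = new_base_time
```

Taking a base of 8 s, adding 3/4 stop, and then re-metering to a 16 s base gives 16 × 2^0.75, roughly 26.9 s: the new base +3/4 stop.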

I know this is far better explained in pictures or videos, which I should put together sometime after I'm finished actually building it out.

> Would you offer the user the option of entering exposure-time in seconds? I am building an LED controller based on an Arduino, and I put a simple timer function in it. Time is set (using the encoder knob) in tenths of stops, ranging from 0 (1 sec) to 9.9 (955 sec). I display the resulting seconds, but they are seldom nice integers (such as 15 or 20) that most folks are accustomed to. You and I are engineers, so we like this design, but I wonder whether it will repel most people. So you might consider letting the user enter seconds.

I do have the ability to explicitly set the time in seconds, but it is mostly intended for cases where you have a specific exposure you want to repeat and already know your starting point. It works something like this:

- Up/Down arrows adjust the time in the currently-selected increment (e.g. "1/4 stop")

- Click the encoder knob and use it to explicitly pick an offset in the finest increment (units of 1/12th stop)

- Long-click the encoder knob and use it to directly set a number of seconds (for now, this also resets the whole base-time/time-adjustment scheme, and isn't intended to be the "standard case")
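One way to model these controls is to store the adjustment as a whole number of 1/12-stop units, the finest increment mentioned above. The class, method, and increment names here are hypothetical. The long-click behavior (the typed seconds become the new base and the adjustment is cleared) is an interpretation of "resets the whole base-time/adjustment thing", not a confirmed detail.

```python
from fractions import Fraction

TWELFTHS_PER_STOP = 12  # finest increment: 1/12 stop

# Hypothetical selectable increments, expressed in twelfths of a stop.
INCREMENTS = {"1/12": 1, "1/6": 2, "1/4": 3, "1/3": 4, "1/2": 6, "1": 12}


class TimerControls:
    def __init__(self, base_time: float):
        self.base_time = base_time
        self.offset_twelfths = 0  # integer count, so no rounding drift
        self.increment = "1/4"    # currently selected Up/Down step

    def up(self):
        self.offset_twelfths += INCREMENTS[self.increment]

    def down(self):
        self.offset_twelfths -= INCREMENTS[self.increment]

    def encoder_step(self, detents: int):
        # Click-then-turn mode: each detent is one 1/12-stop step.
        self.offset_twelfths += detents

    def set_seconds(self, seconds: float):
        # Long-click mode (assumed behavior): typed time becomes the new base
        # and the accumulated adjustment is cleared.
        self.base_time = seconds
        self.offset_twelfths = 0

    def offset_stops(self) -> Fraction:
        return Fraction(self.offset_twelfths, TWELFTHS_PER_STOP)

    def exposure_time(self) -> float:
        return self.base_time * 2.0 ** (self.offset_twelfths / TWELFTHS_PER_STOP)
```

Keeping an integer count of twelfths means every listed increment (1/12, 1/6, 1/4, 1/3, 1/2 and 1 stop) is an exact multiple, so mixing button and knob adjustments never accumulates rounding error.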

The whole point of this device is to be an "f-stop timer", so I have no intention of working in plain seconds. I know the numbers start to look weird, but that's how all of these things work. The whole point is that it's easier than trying to calculate them yourself.
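The odd-looking numbers follow directly from the f-stop rule: each time is a reference time multiplied by 2 raised to the number of stops, so evenly spaced stops rarely land on round seconds. A small sketch, with hypothetical function names:

```python
def fstop_seconds(stops: float, reference: float = 1.0) -> float:
    """Seconds for an exposure `stops` above a reference time."""
    return reference * 2.0 ** stops


def strip_times(base: float, step_stops: float, count: int) -> list:
    """Times for a test strip where each patch gets `step_stops` more than the last."""
    return [fstop_seconds(i * step_stops, base) for i in range(count)]


# An 8 s base in 1/3-stop steps.
print([round(t, 1) for t in strip_times(8.0, 1 / 3, 4)])  # [8.0, 10.1, 12.7, 16.0]

# The quoted controller's top setting: 9.9 stops above 1 s.
print(round(fstop_seconds(9.9), 1))  # 955.4
```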

> Suggestion: Add a "Process" button which merely causes the display to count up seconds (and minutes), starting from 0, displayed in large characters on your 64-row OLED module. This would be trivial to add, and would let your unit function as a process timer for developing/stopping/fixing/etc. I know you don't want to make this a process timer, but the function would be useful if you use large characters to make them readable from the wet side of a large darkroom.

No, I really do not want to do this. If you want a "universal do-everything darkroom timer", there's always the Maya project. Process timing has very different constraints, and is actually something a smartphone app can do a better job of than a standalone appliance if you need "fancy features".

I'm already displaying enlarger timing in pretty large characters, which are quite visible. The timer numbers only get smaller in test-strip mode, where there's other info to display that takes up space. However, the simple fact that the device is probably lying flat on the table would make it hard to read from the other side of the room.

(It's a 256x64 graphic OLED, so I can make it display whatever I want for whatever mode it is in.)

> Suggestion: Put three buttons above and three buttons below the OLED display to function as soft keys. That's what I did on my LED controller, and they are great! I'm using them to select things (such as which LEDs to change) and to set modes. Your device is more sophisticated than my controller, so I think it would benefit even more from this soft-key approach.

I already have like 11 buttons on the device (14 if you count the meter probe, footswitch, and blackout switch). Their function isn't inherently fixed, and they can do different things as needed. I even have simple button combos for things like changing modes, though I'm sticking to more obvious combos there (Up/Down at the same time to change the stop increment, Left/Right at the same time to select the device "mode"). So I don't really see the point in adding more buttons unless I have an actual need or use for them.
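The Up/Down and Left/Right combos can be handled as chords layered on top of the normal single-button actions. This sketch assumes a hypothetical event source that reports the set of buttons held together, and uses made-up increment and mode lists. Real firmware would also need debouncing and a short time window for treating near-simultaneous presses as one chord.

```python
class ButtonCombos:
    """Hypothetical chord handling for Up+Down and Left+Right."""

    INCREMENTS = ["1/12", "1/6", "1/4", "1/3", "1/2", "1"]  # made-up list
    MODES = ["print", "test strip"]                          # made-up names

    def __init__(self):
        self.increment = 2  # start at 1/4 stop
        self.mode = 0

    def on_buttons(self, pressed):
        pressed = frozenset(pressed)
        if pressed == {"up", "down"}:
            # Chord: cycle the stop increment, wrapping at the end.
            self.increment = (self.increment + 1) % len(self.INCREMENTS)
            return "increment:" + self.INCREMENTS[self.increment]
        if pressed == {"left", "right"}:
            # Chord: cycle the device mode.
            self.mode = (self.mode + 1) % len(self.MODES)
            return "mode:" + self.MODES[self.mode]
        if len(pressed) == 1:
            (button,) = pressed
            return "press:" + button  # normal single-button action
        return "ignored"  # any other combination does nothing
```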

Oh yeah, and you can actually plug in a USB keyboard to make it easier to type paper/enlarger profile names.

(But you can still do it without one; it's just a little bit more cumbersome.)