Thank you, this is very helpful.

Not a simple answer, but consider the following:

Now back to the negative. Since it is not designed to be viewed by the human eye, there is no need for any specific color to represent the primaries; all you need is for those primaries to be "viewable by the print stock". You could, for example, have one dye that restricted UV light, one dye that restricted visible light and one dye that restricted infrared. If such a system could actually be built, with widely separated dyes and a print stock that could see those dyes, it would work perfectly and you would not need any masking either. You would not be able to scan the film with a conventional DSLR, though. The original Technicolor process actually just used three rolls of black-and-white film, so this concept has been used before.
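A toy way to see why widely separated dyes remove the need for masking is to compute a crosstalk matrix, where entry (i, j) is how much print layer i responds to dye j. The sketch below assumes idealised boxcar spectra on a hypothetical 10 nm grid and, for simplicity, gives each print layer the same band as the dye it is meant to read. The band edges are illustrative, not measured data. Separated bands give a diagonal matrix (no crosstalk), while overlapping bands produce off-diagonal terms that something like a mask would have to cancel.

```python
# Hypothetical sampling grid: 300-1000 nm in 10 nm steps.
WAVELENGTHS = list(range(300, 1001, 10))

def band(lo_nm, hi_nm):
    """Idealised boxcar spectrum: 1.0 inside [lo_nm, hi_nm), 0.0 elsewhere."""
    return [1.0 if lo_nm <= w < hi_nm else 0.0 for w in WAVELENGTHS]

def crosstalk_matrix(sensitivities, absorptions):
    """Entry [i][j]: spectral overlap between print layer i and dye j."""
    return [[sum(s * a for s, a in zip(sens, absn)) for absn in absorptions]
            for sens in sensitivities]

def off_diagonal_total(m):
    """Sum of the crosstalk terms (everything off the main diagonal)."""
    return sum(m[i][j] for i in range(3) for j in range(3) if i != j)

# Widely separated dyes: UV, visible and infrared (illustrative band edges).
separated = [band(300, 400), band(400, 700), band(700, 1000)]
# Overlapping dyes, loosely like conventional blue/green/red records.
overlapping = [band(400, 520), band(480, 620), band(580, 700)]

m_sep = crosstalk_matrix(separated, separated)
m_ovl = crosstalk_matrix(overlapping, overlapping)
print("separated  :", m_sep, "off-diagonal:", off_diagonal_total(m_sep))
print("overlapping:", m_ovl, "off-diagonal:", off_diagonal_total(m_ovl))
```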

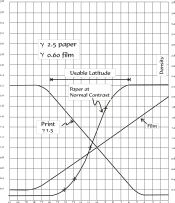

The interaction between color negative and positive mimics part of this concept with the red-sensitive layer. The blue and green layers of the print stock have spectral sensitivities similar to those of traditional camera negative, and are also close to the spectral sensitivities of the blue and green channels of your DSLR. These are also the layers that carry the masking, to reduce crosstalk from adjacent dyes. The red-sensitive layer of the print stock is different from negative film, from a DSLR and from anything designed to match the human eye in some way. For example, it has a blind spot, which allows the use of a color safelight, right where the red sensel of your DSLR has its peak sensitivity and not far from the peak sensitivity of the cones in your eye that respond to long-wavelength light. And the peak sensitivity of the red layer of the paper lies where the infrared-cut filter of your DSLR removes most of the light. Placing the red sensitivity peak around 700 nm reduces crosstalk, which matters because the red layer cannot also have a mask, i.e., you can't have a three-colour mask.
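To illustrate why moving the red peak toward 700 nm helps, here is a rough sketch with Gaussian spectra. All centres and widths are hypothetical: a neighbouring dye (think of a magenta dye absorbing mainly green) is modelled as a band centred at 550 nm, and the red-layer sensitivity is centred either at 600 nm or at 700 nm. The overlap sum over an assumed 380-780 nm range stands in for the unwanted crosstalk that the unmasked red layer picks up.

```python
import math

def gaussian(center_nm, width_nm):
    """Unit-height Gaussian spectrum (a rough stand-in for a real curve)."""
    return lambda w: math.exp(-0.5 * ((w - center_nm) / width_nm) ** 2)

def overlap(f, g, lo_nm=380, hi_nm=780):
    """Crude overlap integral: sum of f*g on a 1 nm grid."""
    return sum(f(w) * g(w) for w in range(lo_nm, hi_nm + 1))

# Hypothetical spectra; none of these numbers are measured data.
neighbour_dye = gaussian(550, 40)
red_layer_600 = gaussian(600, 30)
red_layer_700 = gaussian(700, 30)

x600 = overlap(red_layer_600, neighbour_dye)
x700 = overlap(red_layer_700, neighbour_dye)
print(f"crosstalk at 700 nm is {x700 / x600:.1%} of crosstalk at 600 nm")
```

With these made-up widths the crosstalk drops by well over an order of magnitude; real dye and paper curves have different shapes, so only the direction of the effect carries over.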

So every triplet from your DSLR is slightly wrong, and this cannot be corrected with just a 1D transformation. The 10-bit Cineon system can represent about 1.07 billion triplets (1024³); the 10-bit log encoding was sized to fit the dynamic range of color negative film. There is no need, nor is it possible, to measure the correction needed for every one of those triplets, but you need more than a 1D transformation using curves to model the system.
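The triplet count is plain arithmetic, and a two-patch toy example shows why no per-channel curve can undo crosstalk. The crosstalk coefficient below is hypothetical: it assumes the measured red picks up a quarter of the green signal. Two patches then end up with the same measured red but different true reds, so any 1D curve applied to red alone must get at least one of them wrong.

```python
# Distinct RGB triplets in a 10-bit-per-channel encoding such as Cineon.
LEVELS = 2 ** 10
TRIPLETS = LEVELS ** 3
print(f"{TRIPLETS:,} possible triplets")  # 1,073,741,824 possible triplets

# Hypothetical crosstalk: measured red = true red + 0.25 * true green.
CROSSTALK = 0.25

def measured_red(true_r: float, true_g: float) -> float:
    return true_r + CROSSTALK * true_g

patch_a = (0.5, 0.5)    # (true_r, true_g)
patch_b = (0.375, 1.0)

red_a = measured_red(*patch_a)
red_b = measured_red(*patch_b)

# Same input to any red-only curve f(red), but the true reds differ,
# so f cannot map both patches back to their true values.
print(red_a == red_b, patch_a[0] != patch_b[0])  # True True
```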

OK. So let's iterate on my proposed model once more. We DSLR-scan the negative in a linear representation, then multiply the green and blue channels to render the film rebate black (i.e., account for the differing speeds PE pointed out). Now, you say the blue and green channels of the DSLR scan should be more or less "right" at this point, since their spectral sensitivities are nearly the same as the paper's. So if I shot a greyscale target, I would expect to see neutrals with representation (r, g, b) where g = b (roughly) and r is some crazy number due to the different spectral response. What remains is to correct the red. Since g = b (roughly) and we know their values are correct (roughly), any 3x3 matrix transform is going to boil down to computing r_new = r_old + c*g for some coefficient c. This can be done with some coding, or just by appropriately adding the channels in Photoshop. I can choose c to minimize the shift from perfect neutrals along a greyscale, say.
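Here is a minimal sketch of the two steps described above, in plain Python. Every name in it (`rebate_gains`, `fit_red_coefficient` and so on) is hypothetical, and the patch values are made up for illustration. The coefficient c is chosen by least squares so that r_new = r_old + c*g lands as close as possible to g on the neutral patches; setting the derivative of the sum of squared errors to zero gives c = Σ g(g - r) / Σ g².

```python
def rebate_gains(rebate_rgb):
    """Gains for green and blue that make the linear rebate reading neutral."""
    r, g, b = rebate_rgb
    return r / g, r / b

def apply_gains(rgb, gains):
    """Scale green and blue; red is the reference channel and is left alone."""
    r, g, b = rgb
    g_gain, b_gain = gains
    return (r, g * g_gain, b * b_gain)

def fit_red_coefficient(neutrals):
    """Least-squares c minimising sum((r + c*g - g)**2) over neutral patches."""
    num = sum(g * (g - r) for r, g, _ in neutrals)
    den = sum(g * g for _, g, _ in neutrals)
    return num / den

def correct_red(rgb, c):
    """Apply r_new = r_old + c*g, leaving green and blue untouched."""
    r, g, b = rgb
    return (r + c * g, g, b)

# Made-up linear DSLR readings: the rebate, then two greyscale patches.
rebate = (0.8, 0.4, 0.2)
raw_grey = [(0.3, 0.25, 0.125), (0.15, 0.125, 0.0625)]

gains = rebate_gains(rebate)
balanced = [apply_gains(p, gains) for p in raw_grey]
c = fit_red_coefficient(balanced)
corrected = [correct_red(p, c) for p in balanced]
print(f"c = {c:.3f}")
for p in corrected:
    print(tuple(round(v, 3) for v in p))
```

This follows the post in keeping the coefficient on r_old fixed at 1. A general 3x3 row would give r_new = a*r_old + c*g on neutrals, i.e. one more free parameter, which could be fitted the same way.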

I suppose at this point I need to start empirically evaluating my ideas, and perhaps searching the academic literature on automatic color correction. But what do you think of this? Obviously it's just a linear model, but presumably the nonlinearities of the system aren't so bad.
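One way to start the empirical evaluation is to fit a general 3x3 matrix by least squares to measured and target values for a set of patches, then look at the residual. If the system were linear, the RMS residual would be near zero; whatever is left over is roughly what the linear model misses. The sketch below solves the normal equations with Cramer's rule, which is fine for a 3x3 system. The function names, the patch values and the targets are all assumptions for illustration, not a published method.

```python
import math

def det3(m):
    """Determinant of a 3x3 matrix given as nested lists."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))

def solve3(a, y):
    """Solve a @ x = y for a 3x3 system using Cramer's rule."""
    d = det3(a)
    if abs(d) < 1e-12:
        raise ValueError("singular system: patches must span all three channels")
    x = []
    for col in range(3):
        m = [row[:] for row in a]
        for i in range(3):
            m[i][col] = y[i]  # replace column `col` with the right-hand side
        x.append(det3(m) / d)
    return x

def fit_matrix(measured, target):
    """Least-squares 3x3 matrix M such that target ~= M @ measured, per patch."""
    xtx = [[sum(p[i] * p[j] for p in measured) for j in range(3)] for i in range(3)]
    matrix = []
    for k in range(3):  # one output channel (one matrix row) at a time
        xty = [sum(p[i] * t[k] for p, t in zip(measured, target)) for i in range(3)]
        matrix.append(solve3(xtx, xty))
    return matrix

def rms_residual(matrix, measured, target):
    """Root-mean-square error of the fitted matrix over all patches and channels."""
    err = 0.0
    for p, t in zip(measured, target):
        for k in range(3):
            pred = sum(matrix[k][i] * p[i] for i in range(3))
            err += (pred - t[k]) ** 2
    return math.sqrt(err / (3 * len(measured)))

# Made-up patches and targets: a little red/green crosstalk plus a mild
# nonlinearity in blue that no 3x3 matrix can reproduce exactly.
patches = [(1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0),
           (0.5, 0.5, 0.5), (0.2, 0.6, 0.9)]
targets = [(r + 0.25 * g, g, b ** 1.1) for r, g, b in patches]

M = fit_matrix(patches, targets)
print("fitted matrix:", [[round(v, 3) for v in row] for row in M])
print("RMS residual:", round(rms_residual(M, patches, targets), 4))
```

With real data you would substitute scanned patch values and the target values from whatever reference you trust; a residual that stays large suggests the nonlinearities are not negligible.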

(The comment the book makes about the DSLR response following the Status M response is somewhat confusing and I'm deferring to your more precise answer here.)
