
We have probably all heard of color rendering index (CRI), and that the closer a light source's CRI is to 100, the better it renders color. However, I just learned that there are other measures of color quality. I had intuitively suspected that there must be something beyond CRI, and here's where I got the first inkling that there is more to it: https://www.waveformlighting.com/fi...-important-for-skin-tones-a-spectral-analysis.

I haven't quite figured it all out yet, but I gather that R9 has something to do with getting accurate reds, and that this is a weakness of many light sources. Apparently this is particularly important for getting good skin tones.

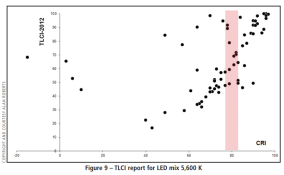

As I surfed the internet some more, I learned that there is something called the Television Lighting Consistency Index (TLCI), which is kind of like CRI except optimized for video cameras with digital sensors. Apparently, a high-CRI light source does not necessarily give good color rendering for modern video cameras, whereas a high-TLCI source does. (I assume, perhaps naively, that a high-TLCI light source would also be good for digital still cameras, but that's a topic for a different time and place.)

Anyway, I did not raise this to discuss digital imaging. I only use it as a point of reference for the idea that different sensors (the eye being one of them) call for different specifications of light source quality. This brings me to the relevant question: what is the optimal light source for film cameras? Is there something like CRI and TLCI that is intended for film? The reason I ask is that I don't think we can assume that a high-CRI light source would give optimal color reproduction on film, because the spectral response of film (in both exposure and viewing) is not the same as that of the human eye. The same goes for high-TLCI light sources; they probably aren't optimized for film either. Does anybody know more about this topic and can teach us about it?
