@Stephen Benskin I agree with your interpretation of the Varden paper. My feeling was that some industry insiders were pushing for a lower safety factor. Beyond the sensitometric case they try to make, we can only speculate on their motivations. If photographers in the 1940s and 1950s were anything like those of today, the change may well have appealed to both manufacturers and consumers. And, yes, the irony struck me, too. It's strange (and fascinating) how these trends have changed over time.

I find some of these trade journal articles insightful because they offer a more applied view of sensitometry, and of photography in general. The lack of peer review means we have to take their claims with a grain of salt, but in my book that's acceptable, at least for some of the claims.

@ic-racer I think I agree with your general take on the w speed and Delta-X speed calculations from the Nelson and Simonds paper. They can be a bit of a moving target. The simple least-squares models used to derive the Delta-X and w speed equations would probably be questioned by a discerning statistician today, but for most of us I think they are good enough approximations. I hope to one day run a script that goes through all of my film data and compares the different film speed models, but I need to find the time to actually do it.

By the way, the numerical method of calculating the Jones 0.3G speed needs to account for the entire curve in order to find the local (fractional) gradients along its trajectory, including any non-linearities in the mid-tones. It can always be improved, no doubt. My goal with this software is ultimately to offer users a few different methods for most of the computed parameters, so as not to make it too opinionated. I think the otherwise excellent Win Plotter app would have been much better received if it had some of those options. As it stands, you more or less have to follow the BTZS system, or at the very least the Zone System, to get the most benefit out of it.
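
For anyone curious what "accounting for the entire curve" means in practice, here is a minimal sketch of a fractional gradient search. It assumes the curve is sampled as strictly increasing log E values with matching densities, and it uses the commonly cited form of the Jones criterion: the point on the toe where the local gradient equals 0.3 times the average gradient over the 1.5 log E range starting at that point. The function names are hypothetical, this is not the actual code in my software, and the final conversion from exposure to a speed number is left out.

```python
import math
from bisect import bisect_left


def interp(x, xs, ys):
    """Linearly interpolate ys at x (xs strictly increasing, x clamped to range)."""
    if x <= xs[0]:
        return ys[0]
    if x >= xs[-1]:
        return ys[-1]
    i = bisect_left(xs, x)
    x0, x1 = xs[i - 1], xs[i]
    return ys[i - 1] + (ys[i] - ys[i - 1]) * (x - x0) / (x1 - x0)


def local_gradient(log_e, density, i):
    """Slope dD/dlogE at sample i: central difference inside, one-sided at the ends."""
    lo = max(i - 1, 0)
    hi = min(i + 1, len(log_e) - 1)
    return (density[hi] - density[lo]) / (log_e[hi] - log_e[lo])


def fractional_gradient_log_e(log_e, density, fraction=0.3, span=1.5):
    """Return log E at the first point (walking up the toe) where the local
    gradient reaches `fraction` times the average gradient over the next
    `span` in log E. Returns None if no such point lies within the data."""
    prev = None  # (log E, criterion value) at the previous usable sample
    for i, x in enumerate(log_e):
        if x + span > log_e[-1]:
            break  # not enough measured curve left to form the average gradient
        avg = (interp(x + span, log_e, density) - density[i]) / span
        if avg <= 0:
            prev = None
            continue
        f = local_gradient(log_e, density, i) - fraction * avg
        if f >= 0:
            if prev is None:
                return x  # criterion already met at the first usable sample
            x0, f0 = prev
            # Locate the zero crossing between the two samples linearly.
            return x0 + (x - x0) * (-f0) / (f - f0)
        prev = (x, f)
    return None


if __name__ == "__main__":
    # Synthetic S-shaped curve (assumed shape, not real film data).
    xs = [-3 + 0.01 * k for k in range(501)]
    ds = [0.1 + 2.0 / (1 + math.exp(-3 * x)) for x in xs]
    print(fractional_gradient_log_e(xs, ds))
```

Because the average gradient depends on density 1.5 log E further up the curve, any shoulder or mid-tone non-linearity within that range shifts the result, which is why the whole curve has to be measured and not just the toe.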

Speaking of the Jones fractional gradient method, the notion of sensitometer light quality comes up a few times. In the Jones and Russell (1935) paper that @Stephen Benskin mentioned above, the sensitometer light quality is described as:

"The radiation used in making the sensitometric exposure shall be identical in spectral composition to that specified by the Seventh International Congress of Photography in defining the photographic unit of intensity for the sensitometry of negative materials." (p. 410)

I must admit I have no idea exactly what that means, because I was unable to obtain the source, listed as "Proc. VIIth Int. Cong. Phot. (1928), p. 173." However, Jones brings up the idea again in the 1949 paper:

"The quality (spectral composition) which has been adopted by international agreement for sensitometric measurements on negative materials is that emitted by a specified tungsten lamp-filter combination that corresponds to the quality of mean noon sunlight in Washington, D.C." (p. 132).

I have come across a couple of mentions that such a light source is not as actinic as actual daylight, and that a factor of 1.3 should be applied to make sensitometer-derived data more compatible with real-world applications. It's a pretty obscure detail, but I thought I'd bring it up to see what you think. My sensitometer has a white (as white as I could currently obtain) and a green LED light source. My DIY device uses an incandescent bulb with an 80A filter. Neither is ideal, but I wonder how much error they introduce compared to the devices used in 20th-century sensitometry research.
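
To put the 1.3 factor in perspective, a quick bit of arithmetic expresses it as a shift along the log E axis and in stops. This is only a sketch: the helper name is hypothetical, and it deliberately says nothing about which direction the correction should be applied, since the sources I've seen are not explicit about that.

```python
import math

LIGHT_QUALITY_FACTOR = 1.3  # factor quoted in the mentions above


def describe_factor(factor):
    """Express an exposure factor as a log E shift and as a number of stops."""
    return {
        "delta_log_e": math.log10(factor),  # shift along the log E axis
        "stops": math.log2(factor),         # same shift in stops
    }


if __name__ == "__main__":
    d = describe_factor(LIGHT_QUALITY_FACTOR)
    print(f"log E shift: {d['delta_log_e']:.3f}")  # prints "log E shift: 0.114"
    print(f"stops: {d['stops']:.2f}")              # prints "stops: 0.38"
```

So the correction amounts to roughly 0.11 log E, a bit over a third of a stop, which is small but of the same order as the differences between some of the speed criteria we have been discussing.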

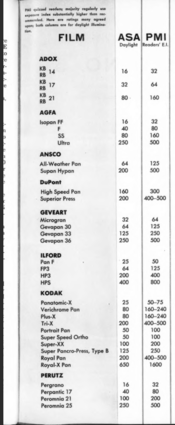

I should also add that Zone System speeds tend to agree with the ASA Exposure Indexes that the article calls "Dangerously Safe."