... I'm not sure how to convert a series of data points into an accurate equation so I can describe the curve. This is needed to draw the curve, and more importantly pull off accurate intermediate values especially in the toe and shoulder areas.

I know I could just interpolate between points, but that isn't very accurate except in the straight line portion.

So, any math majors out there who can point me to the right curve fitting algorithm?

I'm not a math major, but I have had courses in numerical analysis, and I have dabbled with curve fitting a bit over the years, including fitting of characteristic curves for my own amusement and for film testing.

There are several concepts you should keep in mind. First, there is probably no mathematical function that is perfectly adapted to the fitting of characteristic curves, so you are going to have to choose the lesser of evils.

Second, make sure you are "fitting" the curve rather than "interpolating". By this I mean that you should seek a smooth curve that goes "close to" your data points without trying to go "through" all of them. The reason is that experimental data has "noise", i.e. statistical uncertainty. If you force a function through every point you will actually get a lower-quality result than if you find a smooth curve that passes close to the points, because the forced curve ends up following the noise rather than the underlying response. In effect, the noise will be the killer.
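To see the difference on a toy example (my own construction, not real film data): assume the true response is the straight line D = 0.2 + 0.6x and that each of seven readings is off by exactly 0.05, alternately above and below. This plain-Python sketch pushes a degree-6 polynomial through every point (interpolating) and, separately, fits a least-squares straight line (fitting), then compares both with the true value halfway between the first two readings. The function names are hypothetical, chosen for the illustration.

```python
def lagrange_interpolate(xs, ys, x):
    """Evaluate the unique polynomial that passes through every (xs[i], ys[i])."""
    total = 0.0
    for i, (xi, yi) in enumerate(zip(xs, ys)):
        weight = 1.0
        for j, xj in enumerate(xs):
            if j != i:
                weight *= (x - xj) / (xi - xj)
        total += yi * weight
    return total


def least_squares_line(xs, ys):
    """Return (intercept, slope) minimizing the sum of squared residuals."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return mean_y - slope * mean_x, slope


def true_density(x):
    # Hypothetical noiseless response (a straight line, for simplicity).
    return 0.2 + 0.6 * x


xs = [float(i) for i in range(7)]
# Hypothetical noise: readings alternate 0.05 above and below the true value.
ys = [true_density(x) + 0.05 * (-1) ** i for i, x in enumerate(xs)]

intercept, slope = least_squares_line(xs, ys)
x_mid = 0.5  # halfway between the first two readings
interp_error = abs(lagrange_interpolate(xs, ys, x_mid) - true_density(x_mid))
fit_error = abs(intercept + slope * x_mid - true_density(x_mid))
print(f"interpolating polynomial is off by {interp_error:.4f}")
print(f"least-squares line is off by       {fit_error:.4f}")
```

Worked by hand, the interpolating polynomial misses the true value at x = 0.5 by 0.190625, almost four times the noise amplitude, while the fitted line misses by only 0.05/7, about 0.007.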

Third, you should try to find a fitting function whose functional form (i.e. "shape") looks a lot like the curve you would get from densely packed, noiseless data from your experiments. There are several reasons for this, but the two most important are that a function resembling the underlying form of the data is likely to give lower errors than one that does not, and that it lets you fit the data with the fewest adjustable parameters.

The paragraph above implies that the optimal functions are probably not polynomials or cubic splines. Those functions do not have a shape that naturally mimics the shape of a characteristic curve, and they will require more adjustable parameters than more suitable functions would. Furthermore, you are more likely to encounter "pathological" behavior with them. Polynomials are especially bad in this regard, and often give poor results for noisy data with asymptotic tails (like the toe of the characteristic curve). What can happen is that to get an apparently good fit you have to use a high-order polynomial, and then the equation may oscillate wildly between the data points and outside the range of the data. In fact, I can guarantee you that a finite-order polynomial will not fit the toe end of the curve if you extend it to zero exposure.
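Written out, that guarantee is just a statement about limits. Assuming the usual plot of density D against log exposure, zero exposure lies at log E going to minus infinity, where the real curve levels off at base+fog (written D_b+f here; the notation is mine), while any non-constant polynomial p grows without bound in that direction:

$$
\lim_{\log E \to -\infty} D(\log E) = D_{\mathrm{b+f}},
\qquad\text{but}\qquad
\lim_{x \to -\infty} \lvert p(x) \rvert = \infty
\quad\text{for } p(x) = a_n x^n + \cdots + a_1 x + a_0,\ a_n \neq 0,\ n \ge 1 .
$$

So a polynomial that tracks the toe over the measured range must eventually turn away from base+fog once it is extrapolated far enough toward zero exposure.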

Cubic splines are probably better, and according to PE have been used successfully. However, there are still some potential pitfalls. Each segment of a cubic spline requires four adjustable parameters (i.e. "degrees of freedom"). This means that for a single-segment fit you need more than four points in the data set. If you have exactly four points then you are "interpolating" the data, i.e. making the curve go through every point, and you become susceptible to noise and oscillations between the points. (If you have fewer than four points there is no unique answer; infinitely many different cubics would pass through every point, but I digress.) Every additional spline segment requires more data points. It is not four additional points per segment, because you use up some degrees of freedom when you constrain neighboring segments to match where they join (e.g. in value, slope and curvature), but you will still need a lot of points if you use very many segments in a cubic spline fit.
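The degree-of-freedom bookkeeping can be written out directly. This sketch assumes the knot positions are fixed in advance (if they are also adjusted, each movable knot adds a parameter) and that at each interior knot the value and the first `continuity` derivatives are forced to match; `continuity=2` corresponds to the usual cubic spline. The function name is hypothetical.

```python
def cubic_spline_free_parameters(segments: int, continuity: int = 2) -> int:
    """Free parameters in a piecewise cubic with `segments` pieces and fixed knots.

    Each cubic piece has 4 coefficients. At each of the (segments - 1)
    interior knots, matching the value and the first `continuity`
    derivatives removes (continuity + 1) parameters.
    """
    if segments < 1:
        raise ValueError("need at least one segment")
    if not 0 <= continuity <= 2:
        raise ValueError("pieces can match at most value, slope and curvature")
    return 4 * segments - (continuity + 1) * (segments - 1)


for k in (1, 2, 3, 5, 10):
    print(k, "segments ->", cubic_spline_free_parameters(k), "parameters")
```

For the usual case this works out to k + 3 parameters for k segments, so a genuine fit with k segments needs more than k + 3 points; with exactly k + 3 points you are generally back to interpolating.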

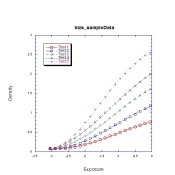

A more suitable set of functions would be the integral of some kind of bell-shaped curve, or in some cases the sum of more than one such curve. There are a lot of possibilities you could try, but I have had some luck using the integral of scaled forms of so-called "Gaussian" curves, also known as the normal distribution in statistics. If you only want to fit the toe of the curve along with part of the curve approaching the linear region, then you might be able to get away with a single (integrated) Gaussian curve, plus an additive constant (offset) representing base+fog. If you can determine base+fog separately, then you can subtract it from the data and dispense with the additive constant in your fit. The first version (with an adjustable constant offset) has four adjustable parameters, three from the Gaussian function and one for the offset. The second version has three adjustable parameters, all from the Gaussian function.
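In symbols, one way to write this model (my notation, a sketch rather than a standard formula) is D(x) = D_bf + A·Φ((x − μ)/σ), where x is log exposure and Φ is the standard normal cumulative distribution, i.e. the integral of the unit Gaussian. A, μ and σ are the three Gaussian parameters and D_bf is the optional offset. Python's standard library provides `math.erf`, and Φ(z) = ½[1 + erf(z/√2)], so the model takes only a few lines. The function and parameter names are hypothetical.

```python
import math


def integrated_gaussian_density(log_e, amplitude, center, width, base_fog=0.0):
    """Density from one integrated Gaussian plus a constant offset.

    amplitude: total density rise this term contributes above base_fog
    center:    log exposure where the curve is steepest
    width:     standard deviation of the underlying Gaussian (log-exposure units)
    base_fog:  constant offset; leave at 0 if base+fog was subtracted beforehand
    """
    z = (log_e - center) / (width * math.sqrt(2.0))
    return base_fog + amplitude * 0.5 * (1.0 + math.erf(z))


for x in (-0.5, 0.0, 0.5, 1.0, 1.5):
    print(x, round(integrated_gaussian_density(x, 2.0, 1.0, 0.4, 0.1), 4))
```

At x = center the density is base_fog + amplitude/2 and the slope is at its maximum, amplitude/(width·√(2π)); far below the center it settles to base_fog, which is the asymptotic toe behavior a polynomial cannot reproduce.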

I would bet money that you will end up with a much better fit this way than if you started with polynomials or cubic spline functions having the same number of degrees of freedom. When I say "better" I mean that it is likely to be a truer representation of the underlying physical function and less subject to noise. Speaking of noise, this general scheme results in a smoothing effect which tends to minimize the effect of noise on the quality of the final result.

You can also add more Gaussian functions to the fit. There are positive and negative consequences to doing this, which I will not go into right now.
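Mechanically, adding terms is simple. In this sketch (hypothetical names, same parameterization as a single integrated Gaussian) each (amplitude, center, width) triple is one more integrated Gaussian, so every extra term adds three adjustable parameters to the fit.

```python
import math


def normal_cdf(z):
    """Integral of the unit Gaussian from minus infinity to z."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def multi_gaussian_density(log_e, terms, base_fog=0.0):
    """base_fog plus a sum of integrated Gaussians.

    terms: iterable of (amplitude, center, width) triples.
    """
    return base_fog + sum(a * normal_cdf((log_e - c) / w) for a, c, w in terms)


# Hypothetical two-term curve: a broad term plus a narrower one.
curve = [(1.2, 0.8, 0.5), (0.6, 1.6, 0.3)]
print(round(multi_gaussian_density(1.0, curve, base_fog=0.15), 4))
```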

In fitting a function you will need to pick a so-called "objective function" which provides a measure of the goodness of fit. There are a lot of choices, but the overwhelming favorite is to minimize the sum of the squares of the differences between your data and the fit. These differences are called "residuals."
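As a concrete illustration of that objective function (a sketch; the names are my own, and the straight-line model is only a placeholder for whatever curve is being fitted):

```python
def residuals(model, params, xs, ys):
    """Data minus fit at each point."""
    return [y - model(x, *params) for x, y in zip(xs, ys)]


def sum_of_squares(model, params, xs, ys):
    """The usual least-squares objective: smaller means a better fit."""
    return sum(r * r for r in residuals(model, params, xs, ys))


def straight_line(x, intercept, slope):
    # Placeholder model; any function model(x, *params) works the same way.
    return intercept + slope * x


print(sum_of_squares(straight_line, (0.0, 1.0), [0.0, 1.0, 2.0], [0.1, 0.9, 2.2]))
```

Here the residuals are 0.1, -0.1 and 0.2, so the objective is 0.06 (up to floating-point rounding). A fitting program adjusts `params` to make this number as small as possible.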

One disadvantage of the scheme I outlined above is that you will end up doing a so-called non-linear fit, whereas with certain other combinations of functions (e.g. polynomials) and objective functions (e.g. sum of squares of residuals) you only have to solve a set of linear equations. In addition, the scheme I outlined can be sensitive to the quality of your initial guess, and can converge to a "bad" solution if the initial guess is way out of line. However, with modern computers non-linear fits are not too difficult, and neither is picking an initial guess that converges to the right solution.
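For anyone who does end up writing their own program, here is a minimal, dependency-free sketch of one standard non-linear least-squares method, Levenberg-Marquardt (a damped Gauss-Newton iteration). It is my illustration, not what psi-plot or any other package does internally. It fits the three-parameter version of the integrated-Gaussian model (base+fog already subtracted), uses analytic derivatives, and is exercised on hypothetical noiseless synthetic data so that the right answer is known in advance.

```python
import math

SQRT_2 = math.sqrt(2.0)
SQRT_2PI = math.sqrt(2.0 * math.pi)


def model(x, p):
    """Integrated Gaussian, base+fog already subtracted; p = (amplitude, center, width)."""
    amplitude, center, width = p
    return amplitude * 0.5 * (1.0 + math.erf((x - center) / (width * SQRT_2)))


def jacobian_row(x, p):
    """Partial derivatives of model(x, p) with respect to amplitude, center, width."""
    amplitude, center, width = p
    z = (x - center) / width
    cdf = 0.5 * (1.0 + math.erf(z / SQRT_2))
    pdf = math.exp(-0.5 * z * z) / SQRT_2PI
    return [cdf, -amplitude * pdf / width, -amplitude * pdf * z / width]


def sum_sq(p, xs, ys):
    """Objective: sum of squared residuals."""
    return sum((y - model(x, p)) ** 2 for x, y in zip(xs, ys))


def solve(m, b):
    """Solve the small linear system m v = b (Gaussian elimination, partial pivoting)."""
    n = len(b)
    aug = [list(row) + [b[i]] for i, row in enumerate(m)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, n):
            factor = aug[r][col] / aug[col][col]
            for k in range(col, n + 1):
                aug[r][k] -= factor * aug[col][k]
    v = [0.0] * n
    for r in range(n - 1, -1, -1):
        v[r] = (aug[r][n] - sum(aug[r][k] * v[k] for k in range(r + 1, n))) / aug[r][r]
    return v


def levenberg_marquardt(xs, ys, p0, max_iter=200):
    """Minimize sum_sq by damped Gauss-Newton steps, starting from guess p0."""
    p = list(p0)
    cost = sum_sq(p, xs, ys)
    lam = 1e-3  # damping: small -> Gauss-Newton step, large -> short gradient step
    for _ in range(max_iter):
        rows = [jacobian_row(x, p) for x in xs]
        res = [y - model(x, p) for x, y in zip(xs, ys)]
        # Normal equations of the linearized problem: (J^T J) step = J^T r
        jtj = [[sum(r[i] * r[j] for r in rows) for j in range(3)] for i in range(3)]
        jtr = [sum(r[i] * e for r, e in zip(rows, res)) for i in range(3)]
        improved = False
        while lam < 1e12:
            damped = [[jtj[i][j] * (1.0 + lam if i == j else 1.0) for j in range(3)]
                      for i in range(3)]
            step = solve(damped, jtr)
            trial = [a + b for a, b in zip(p, step)]
            if trial[2] > 0.0:  # the width must stay positive
                trial_cost = sum_sq(trial, xs, ys)
                if trial_cost < cost:
                    improved = True
                    break
            lam *= 10.0  # step rejected: damp harder and retry
        if not improved:
            break  # no downhill step left: treat as converged
        decrease = cost - trial_cost
        p, cost, lam = trial, trial_cost, lam / 10.0
        if decrease < 1e-15 * (1.0 + cost):
            break
    return p, cost


# Hypothetical synthetic data: noiseless samples of a known curve.
true_params = (2.0, 1.0, 0.5)
log_e = [-0.4 + 0.2 * i for i in range(15)]
density = [model(x, true_params) for x in log_e]
fitted, final_cost = levenberg_marquardt(log_e, density, p0=(1.5, 0.8, 0.7))
print("amplitude, center, width:", [round(v, 4) for v in fitted])
```

Starting from the deliberately rough guess (1.5, 0.8, 0.7), the iteration should recover the values (2.0, 1.0, 0.5) used to generate the data. With real, noisy readings the final cost will not go to zero, and a poor start can stall it (for example, a width so small that the Gaussian is essentially zero at every data point, which flattens the derivatives). One plausible starting point is an amplitude near the observed density range, a center near where the curve is steepest, and a width of some fraction of the toe length.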

My favorite program for doing non-linear least squares fits to user-supplied functions is called "psi-plot". It does most of the math for you. However, I don't think it would interface well to the problem you have in mind due to the issue of putting the data in and getting the results out in a convenient way. "Excel" might have the ability to do non-linear least squares, and some other vector-oriented packages no doubt have this capability as well. However, you might have to end up writing your own program, which would be somewhat of a pain in the neck.