albada

When programming my LED-head controller, I discovered that if a paper has a low Dmax, its computed grade is higher than that of a paper with a normal Dmax and (importantly) the same contrast in the midtones. Same contrast; different grade. We have a problem.

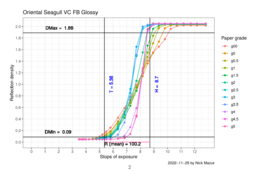

This anomaly occurs because grade is based on LER, the log exposure range between the exposures that produce densities 0.09 and 0.9*Dmax. Because the paper's Dmax is low, the upper density is reached sooner, so its LER is lower and its computed grade is higher.

This is a problem with an LED controller because if you specify grade 2 (for example) for your print, you'll get different contrasts for Ilford (Dmax=2.10) vs Foma (Dmax=1.87). In effect, the term 0.9*Dmax lets Foma cheat and claim higher contrast than it actually achieves.

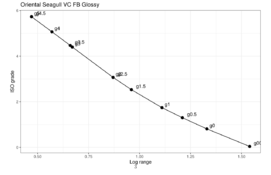
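To put rough numbers on the effect of the 0.9*Dmax term, here is a minimal Python sketch. It assumes an idealized straight-line curve with no toe or shoulder, and the midtone slope of 1.6 is a made-up value used only for illustration; `ler_straight_line` is a hypothetical helper, not something from my controller code.

```python
def ler_straight_line(d_max, slope, d_low=0.09, high_fraction=0.9):
    """LER for an idealized straight-line H&D curve (no toe or shoulder).

    The range runs from density d_low up to high_fraction * d_max.
    On a straight line, log-exposure span = density span / slope.
    """
    d_high = high_fraction * d_max
    return (d_high - d_low) / slope


# Assumption: both papers share the same midtone slope (illustrative value).
MIDTONE_SLOPE = 1.6

ler_ilford = ler_straight_line(2.10, MIDTONE_SLOPE)  # Dmax from the post
ler_foma = ler_straight_line(1.87, MIDTONE_SLOPE)    # Dmax from the post

print(f"Ilford LER: {ler_ilford:.3f}")
print(f"Foma   LER: {ler_foma:.3f}")
```

Under these assumptions the lower-Dmax paper ends up with the smaller LER even though both curves have identical slope, which is the anomaly described above. Real curves have a toe and shoulder, so actual numbers will differ.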

In order for different papers to print at the same contrast (and thus look the same) for the same grade, it seems to me that grade should be based on the slope of the central portion of the paper's HD curve. I see two ways to do this:

* Determine LER between fixed densities of zones VII to III (i.e., densities of 0.19 and 1.61). This solution assumes 1.61 is off the shoulder for all papers. Grade can be determined by LER as in the past, but the thresholds for grades would change.

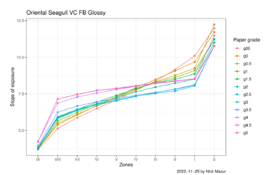

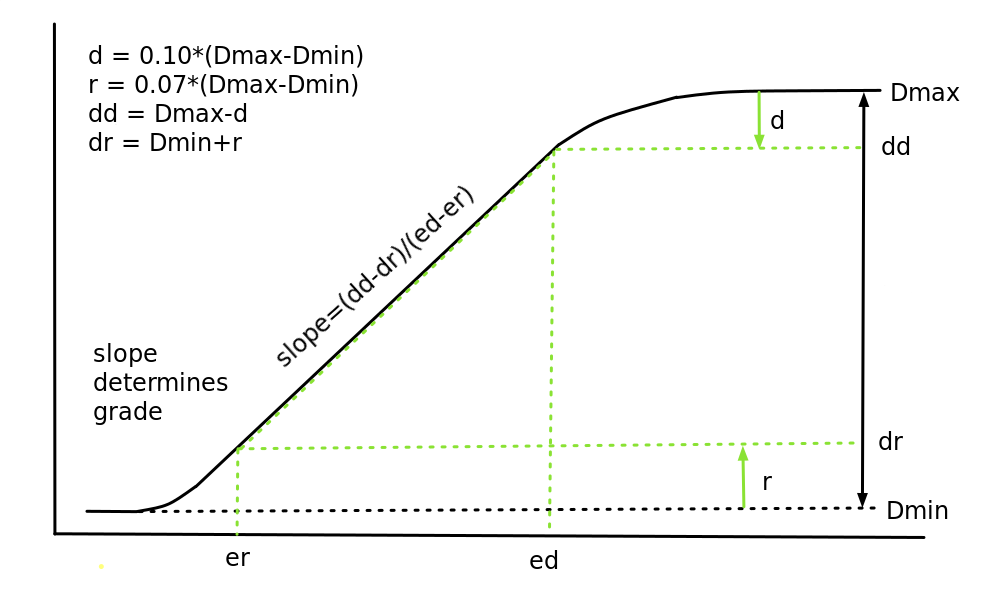

* Determine LER between variable densities computed as fractions involving Dmin and Dmax, and base grade on the slope, as illustrated below.

The constants 0.07 and 0.10 match zones VII and III when Dmax=2.1, but we can use 0.10 or somesuch for both -- I doubt it matters. Grade would be determined by slope and not by LER.
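As a rough sketch of how the fixed-density idea could be computed from measured data, the Python below interpolates a sampled curve at densities 0.19 and 1.61 and reports both the LER and the slope between those points. The curve format (a list of `(log_exposure, density)` pairs), the helper names, and the synthetic clipped-line test curves with Dmin=0.05 and slope 1.6 are all assumptions for illustration. For the variable-density proposal, the same function could be called with `d_low` and `d_high` computed from Dmin and Dmax; the exact fractions are not reproduced here.

```python
def log_e_at_density(curve, target):
    """Log exposure at which the curve first reaches `target` density.

    `curve` is a list of (log_exposure, density) pairs sorted by exposure.
    Linear interpolation is used between neighbouring samples.
    """
    for (x0, d0), (x1, d1) in zip(curve, curve[1:]):
        if d1 > d0 and d0 <= target <= d1:
            return x0 + (target - d0) * (x1 - x0) / (d1 - d0)
    raise ValueError("target density is not reached by this curve")


def midtone_ler_and_slope(curve, d_low=0.19, d_high=1.61):
    """Return (LER, slope) measured between two fixed densities."""
    ler = log_e_at_density(curve, d_high) - log_e_at_density(curve, d_low)
    return ler, (d_high - d_low) / ler


def clipped_line(d_min, d_max, slope, n=61):
    """Synthetic test curve: a straight line clipped at d_min and d_max."""
    xs = [i * 0.05 for i in range(n)]  # log exposure from 0.0 to 3.0
    return [(x, min(d_max, max(d_min, d_min + slope * (x - 0.5)))) for x in xs]


# Same assumed midtone slope, different Dmax (values from the post).
ilford = clipped_line(d_min=0.05, d_max=2.10, slope=1.6)
foma = clipped_line(d_min=0.05, d_max=1.87, slope=1.6)

ler_i, slope_i = midtone_ler_and_slope(ilford)
ler_f, slope_f = midtone_ler_and_slope(foma)
print(f"Ilford: LER={ler_i:.4f} slope={slope_i:.3f}")
print(f"Foma:   LER={ler_f:.4f} slope={slope_f:.3f}")
```

With these synthetic curves, both papers report the same LER and slope because the measurement window no longer depends on Dmax. As noted in the first bullet, this only holds if 1.61 sits below the shoulder of every paper being compared.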

I believe this anomaly has not been a problem in the past because most people use tungsten lamps with filters, and as long as Foma yields the same visual contrast as Ilford with the same filters, users are happy. But when we apply the standard definitions to make LER (and thus grade) the same between papers via a microcontroller, the papers don't respond with the same contrast. I think this problem will become more serious as LEDs become more popular.

Your thoughts about this problem and my proposed solutions?
